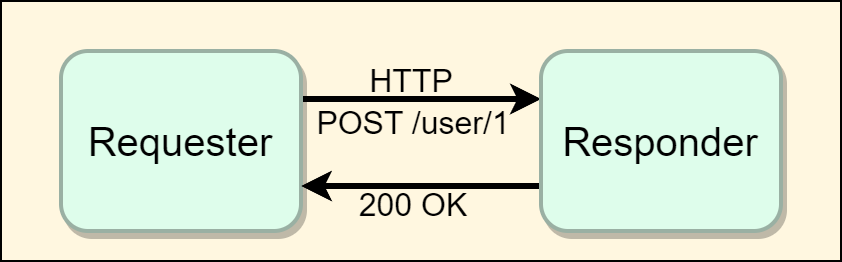

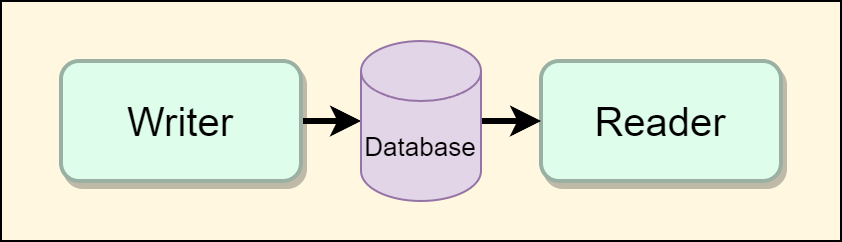

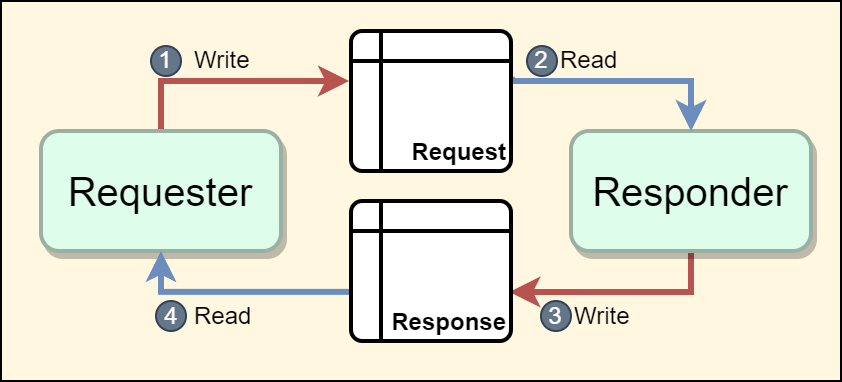

A distributed system is a network of components that communicate with each other to achieve some result. Two common ways for systems to communicate are RPC (request/response) and data sharing (read/write) [1].

In the case of RPC (remote procedure calls), a requester makes a service call to a “responder” service, which handles the request and returns a response.

In the case of data sharing, a writer component writes some data to a persistence layer like files, databases, caches, or queues. Then some set of readers come along and read the data and process it in whatever way they find appropriate.

Large systems quickly become complex because any component can play multiple roles: it can be a requester into some systems and a responder for others, a writer for some systems and a reader for others. The simpler model is still useful, though, because when a change is being considered, usually only one of the component’s roles is impacted by the change.

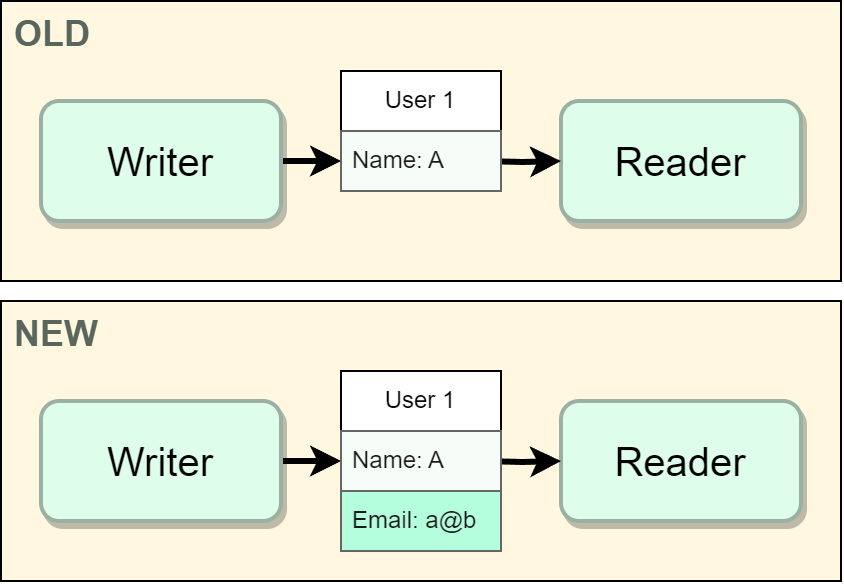

Adding a Field

Let’s start with an example that is usually not considered a breaking change: a new field being added to a database. For this change to be complete, we want our writers to write the new field and our readers to read it.

If we update the reader to read the new field first, we will break the system: the reader will try to read the new field, but the field won’t be populated, which will cause failures. If we update the reader and the writer simultaneously, we still have a broken system. This is because in distributed systems, components don’t deploy together and they don’t deploy atomically. Even if the reader and the writer live in the same monorepo, deployments are still not guaranteed to happen together.

So the right way to manage this would be to update the writer and deploy that. Then update any stale records (that won’t automatically get updated) via an ad-hoc one-time update. Once the database is in the right state and the updated writers are deployed, we can update the readers and deploy them.
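The ordering above can be sketched with an in-memory dictionary standing in for the database. The `email` field, the `records` store, and every function name here are hypothetical, chosen only for illustration; a real backfill would be a one-time script or query against the actual datastore.

```python
# Hypothetical in-memory "database": record id -> stored document.
records = {
    1: {"name": "Ada"},  # written before the new field existed
}


def write_v2(record_id, name, email):
    """Step 1: the updated writer populates the new field on every write."""
    records[record_id] = {"name": name, "email": email}


def backfill(default_email="unknown"):
    """Step 2: one-time update for stale records no writer will touch again."""
    updated = 0
    for doc in records.values():
        if "email" not in doc:
            doc["email"] = default_email
            updated += 1
    return updated


def read_v2(record_id):
    """Step 3: the updated reader can now rely on the field being present."""
    doc = records[record_id]
    return f'{doc["name"]} <{doc["email"]}>'


write_v2(2, "Grace", "grace@example.com")
backfilled = backfill()
print(read_v2(1))
print(read_v2(2))
```

If `read_v2` were deployed before the backfill ran, the lookup of `email` on record 1 would raise a `KeyError`, which is the failure mode described above.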

This model works equally well for other persistence layers (files, caches, queues) as long as adding a new field is not a breaking operation in that serialization system [2].
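As a small illustration of why an added field is usually non-breaking, here is a sketch using JSON. The `name` and `email` fields and the `old_reader` function are assumptions made up for this example.

```python
import json


def old_reader(payload: str) -> str:
    """Reader written before the new field existed; it only looks up keys it knows."""
    doc = json.loads(payload)
    return doc["name"]


# Payload from an old writer, and one from a writer that already adds "email".
old_payload = json.dumps({"name": "Ada"})
new_payload = json.dumps({"name": "Ada", "email": "ada@example.com"})

print(old_reader(old_payload))
print(old_reader(new_payload))
```

The old reader returns the same result for both payloads because it never looks at the extra key. A reader that validates payloads against a strict schema and rejects unknown keys would not have this property.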

For request/response, this model applies just as well, but the role of reader/writer depends on where the field is being added. If the new field is added to the request, then the requesters are the “writers”. All the requesters have to be updated before the responder service can access the new fields. If the new field is added to the response, then the “writers” are the responders. So in that situation, all the responders must be updated before the requesters can access the field. Again, the same caveat applies: this only works if adding a new field is a non-breaking change in the specific serialization system.

Breaking Changes

In the previous example, it was okay to update the writers first because the “old” readers would still be able to handle events that the new writer was writing. The extra fields could just be ignored. However, this is no longer true when breaking changes are being made.

For example, let’s say we had initially designed this data structure for a phonebook:

    Contacts {
      "contact1_name": str,
      "contact1_num": int,
      "contact2_name": str,
      "contact2_num": int
    }

After realizing that a phonebook should have more than 2 contacts, we come up with this saner data structure:

    Contact {
      "name": str,
      "phoneNumber": str
    }

    Contacts {
      "contacts": Contact[],
      "count": int
    }

If we treat this as a non-breaking change and let our writers write the new data structure first, all the readers will start failing. They will try to treat the data like the old data structure and will fail to parse it.

Similarly, if we treat this as a non-breaking change in an RPC situation, our responder services will start returning the new data structure. All the requesters will start failing because they won’t know how to handle it.
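The failure is easy to reproduce in a few lines. This is a minimal sketch in which plain dictionaries stand in for the stored data; the `old_reader` function and the sample values are hypothetical.

```python
def old_reader(phonebook: dict) -> list:
    """Reader written against the original two-contact structure."""
    return [
        (phonebook["contact1_name"], phonebook["contact1_num"]),
        (phonebook["contact2_name"], phonebook["contact2_num"]),
    ]


old_data = {
    "contact1_name": "Ada", "contact1_num": 5550100,
    "contact2_name": "Grace", "contact2_num": 5550101,
}
new_data = {
    "contacts": [{"name": "Ada", "phoneNumber": "5550100"}],
    "count": 1,
}

print(old_reader(old_data))

# The new structure has none of the keys the old reader depends on.
try:
    old_reader(new_data)
except KeyError as missing:
    failure = f"old reader failed: missing key {missing}"
    print(failure)
```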

So how should this breaking change be deployed? There are two general answers: double write or forked read.

Double Write

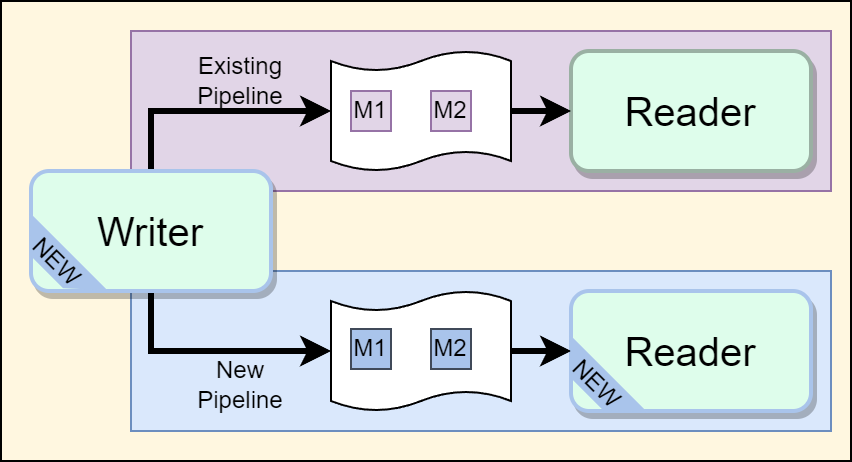

Since the goal is to protect the existing readers, we can protect them by not changing the data that they read at all. Instead, our writers can write the new data structure to a new location. They continue to write the old data structure to the same place as before but they also start writing the new data structure to a new location. The location depends on the type of data sharing system but here are some examples:

- Databases: New database or table.

    - New fields can work as well if the new data structure can be represented as a field

- Files: New directory/filename

- Queues: New queue/topic

- Responder: The new response is only sent as part of a brand new request

    - Or a full new service can be created with a new name

Once the writers are updated, the existing readers will continue to work since they are processing data from the old location. But now, individual readers can start migrating to consuming the new data structure when they are ready. After all the readers get updated to read from the new source, the writers can stop writing to the old location.
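A minimal sketch of a double-writing writer, using the phonebook structures from earlier. The two dictionaries standing in for the old and new locations, and all function names, are hypothetical. The sketch also assumes phone numbers are digit-only strings so they fit the old `int` field.

```python
# Hypothetical stores: the old location that existing readers use, and a
# new location that migrated readers switch to.
old_location = {}
new_location = {}


def to_old_format(contacts):
    """Flatten into the original structure, which only has two slots.

    Any contacts beyond the first two cannot be represented and are dropped.
    """
    first, second = contacts[0], contacts[1]
    return {
        "contact1_name": first["name"],
        "contact1_num": int(first["phoneNumber"]),
        "contact2_name": second["name"],
        "contact2_num": int(second["phoneNumber"]),
    }


def to_new_format(contacts):
    return {"contacts": list(contacts), "count": len(contacts)}


def double_write(key, contacts):
    """Write the old structure to the old location and the new one to the new."""
    old_location[key] = to_old_format(contacts)
    new_location[key] = to_new_format(contacts)


def old_reader(key):
    doc = old_location[key]
    return [doc["contact1_name"], doc["contact2_name"]]


def new_reader(key):
    return [c["name"] for c in new_location[key]["contacts"]]


double_write("alice", [
    {"name": "Ada", "phoneNumber": "5550100"},
    {"name": "Grace", "phoneNumber": "5550101"},
])
print(old_reader("alice"))
print(new_reader("alice"))
```

Both readers see the same contacts, so each reader can switch from `old_reader` to `new_reader` on its own schedule. Once nothing reads `old_location`, the `to_old_format` path can be deleted from the writer.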

The advantage of this approach is that it provides a safe migration path for the readers. The readers can easily test consuming the new data structure because the writers are already writing it. The readers can safely deploy/undeploy their code in case they run into any issues.

The downside of this approach is that the writers have to carry an extra burden for an undetermined amount of time. The double writing results in a performance hit and it doubles the resource usage. Additionally, the code base of the writer has to continue to maintain the logic of writing two different data structures. This code cannot be removed till all the readers are migrated.

Forked Read

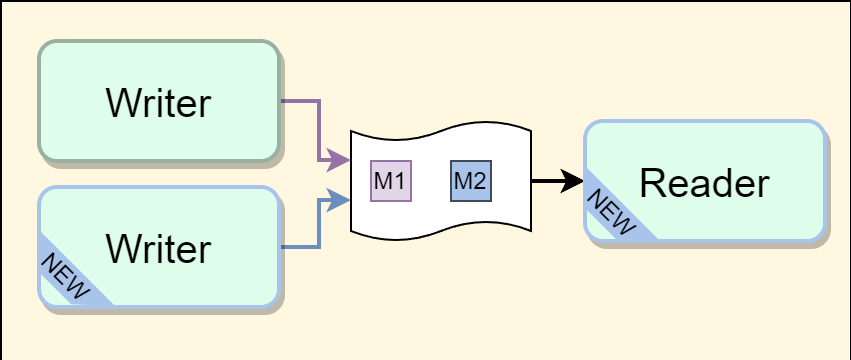

Another way to protect the readers is by adding logic to the readers themselves. The readers can be upgraded so that they can handle both the old and the new data structure. The simplest way to achieve this is for the reader to try to deserialize the data using the old format. If that succeeds, it can keep following its existing processing. If it fails, it can try to deserialize the data using the new format and, if that succeeds, follow the new processing.

Specific serialization systems may allow for cleaner logic to avoid parsing the message twice. For example, for JSON you can just check if specific fields exist. For protobuf, you can just check the type of the resulting message. Other serialization formats will let you check the version of the message. However it’s achieved, the end result is that you fork off your processing depending on whether you are reading the old data structure or the new data structure.
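For JSON, the field-existence check could look like the sketch below. It reuses the phonebook structures from earlier. The `read_phonebook` function and its choice to normalize both formats into a list of `(name, number)` tuples are assumptions made for illustration.

```python
import json


def read_phonebook(payload: str) -> list:
    """Forked read: parse once, then branch on which format was received."""
    doc = json.loads(payload)

    if "contacts" in doc:
        # New format: a list of contact objects.
        return [(c["name"], c["phoneNumber"]) for c in doc["contacts"]]

    if "contact1_name" in doc:
        # Old format: two fixed slots with integer phone numbers.
        return [
            (doc["contact1_name"], str(doc["contact1_num"])),
            (doc["contact2_name"], str(doc["contact2_num"])),
        ]

    raise ValueError("unrecognized phonebook format")


old_payload = json.dumps({
    "contact1_name": "Ada", "contact1_num": 5550100,
    "contact2_name": "Grace", "contact2_num": 5550101,
})
new_payload = json.dumps({
    "contacts": [
        {"name": "Ada", "phoneNumber": "5550100"},
        {"name": "Grace", "phoneNumber": "5550101"},
    ],
    "count": 2,
})

print(read_phonebook(old_payload))
print(read_phonebook(new_payload))
```

Both calls return the same normalized list, so the rest of the reader does not need to know which format arrived. The old-format branch can be removed once the writers have fully switched over.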

Once all the readers have been migrated to do these forked reads, the writers can be updated to start writing the new format.

The advantage of this approach is that you can avoid double writes which can be a concern when the writes are expensive for a system. Another advantage is that all the systems can switch to using the new data structure around the same time. In the double-write system, each reader onboards at its own pace. In this forked-read system, all readers start getting the new data structure around the same time.

There are disadvantages though. Since most systems have more readers than writers, this strategy requires more systems to implement and maintain the forked-read processing. Another disadvantage is that this system can be harder to test than the double-writes system. In the double-writes system, the new format is already being written so the readers can test against it. In the forked read system, the new format is not being written till all the readers migrate so each reader has to figure out how to test their logic and hope that the updated writers will match that behavior.

Due to these disadvantages, the forked-read strategy is not the first choice for data sharing systems (databases, caches, queues). In the RPC world, though, this strategy is more common when the request itself is changing. Recall that when we are talking about evolving the request, the requester is the writer and the responder service is the reader. Since there is usually only one “reader” here, and since services are expected to be tested without a specific client in mind anyway, the disadvantages discussed above don’t apply. Responder services should ideally add a new request endpoint for processing the new data structure, but if that’s not possible, forked reads can be a strategy to allow a service to handle two distinct data structures in the same request endpoint.

Conclusion

Making breaking changes is difficult and requires a lot of effort and coordination. Prefer not making breaking changes. However, when you do make a breaking change, do it knowingly and with a plan.

For RPC (request/response) systems, prefer adding a new request and migrating requesters to the new request. If you must keep the same endpoint, consider doing a forked read to minimize the need to coordinate with all your callers simultaneously.

For data sharing systems (databases, caches, files, queues), prefer doing a double write so the existing readers continue working as-is. Then onboard readers onto the new system as they are updated. If double writes are too expensive then you have to fall back to the forked reads. Find ways to help the readers test their forked reads well before you start pushing your updated writers to production!

[1] Queueing systems can be considered a special case of RPC (request with no response) but I like to think of them as a special case of data sharing. Instead of writing to a database, a writer writes to a queue and many readers read from it.

[2] Most serialization formats will support appending new fields as a non-breaking operation (JSON, XML, protobuf, YAML, etc.). Systems that don’t know about the new field will safely ignore it. Optimized/specialized/home-grown serialization formats might not make the same guarantees.